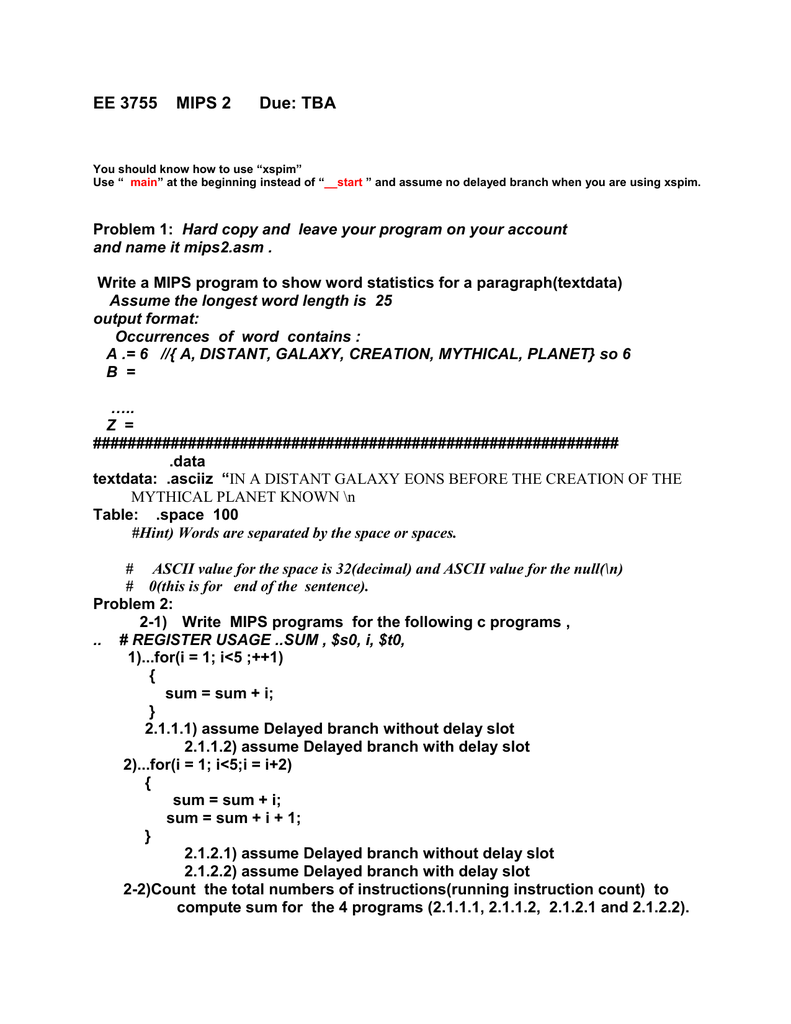

Delay Slot Instruction Mips

Posted By admin On 27/03/22Delay slots The MIPS is a pipelined architecture, and certain aspects of the pipeline are exposed to the programmer. In general, 'slow' instructions are not finished until the instruction.two. spaces after them is being fetched. The instruction in between is referred to as a 'delay slot'. In MIPS, executing a branch in a branch delay slot results in UNDETERMINED behavior. Conditional delay slot instructions. Things get more complicated when the delay-slot instruction is effectively predicated on the branch direction. SPARC supports 'annulled' branches in which the delay-slot instruction is not executed if the branch is not taken.

CS641 Class 26

Handout on MIPS and x86 Branch Examples

•Optimization #2: Redefine branches

•Old definition: if we take the branch, none of the instructions after the branch get executed by accident

•New definition: whether or not we take the branch, the single instruction immediately following the branch gets executed (called the branch-delay slot)

•Notes on Branch-Delay Slot

•Worst-Case Scenario: can always put a no-op in the branch-delay slot

•Better Case: can find an instruction preceding the branch which can be placed in the branch-delay slot without affecting flow of the program

•re-ordering instructions is a common method of speeding up programs

•compiler must be very smart in order to find instructions to do this

•usually can find such an instruction at least 50% of the time

•Jumps also have a delay slot…

Example: more or into branch delay slot:

Some RISCs like PowerPC and ARM do not have a delay slot, but for example MIPS, SPARC, PA-RISC have it.

°Instruction slot after a load is called “load delay slot”

°If that instruction uses the result of the load, then the hardware interlock will stall it for one cycle.

°If the compiler puts an unrelated instruction in that slot, then no stall

°Letting the hardware stall the instruction in the delay slot is equivalent to putting a nop in the slot(except the latter uses more code space)

Branches in MIPS and x86 code—see handout

Branch Prediction: Procedurization is not costly today

This is important to understand for software developers.A lot of people know a little about branch stalls and misapply it to simple calls and returns. Today’s processors are very clever at predicting branches involved in calls and returns, even if the target is computed, as we have seen in MIPS code.So procedurizing code has no serious overhead.In other words, the “call overhead” is not great.

Dynamic Branch Prediction techniques

Keep data by branch address, on how the branch has gone.

Simplest: one bit, but too simple

Next: two bits, used by AMD Opteron, the CPU for sf06

Idea of saturating up/down counter, shown on pg. 381, but very simple:

00 <--> 01 <--> 10 <--> 11go right if branches , left if not, stick at either end

predict nopredict

branchbranch

Example of loop execution:

body of loop

branch back, or not if done

Delay Slot Instruction Mips Bike Helmet

start at 10, because previous loop execution should have gone left one spot from 11 at end

first pass: predict branch, counter = 11

...

last pass: predict branch, wrong!, counter = 10

So we see just one missed prediction for the whole loop.

Delay Slot Instruction Mips Software

When the CPU predicts wrong, it finds out and has to flush the pipeline and start over on the right instructions—that’s the pipeline stall.

The Opteron has space for 16K such 2-bit counters

It has a 12-stage pipeline for integer instructions, and an 11-cycle penalty on branch mis-predictions. Ouch!It doesn’t use branch delay slots.

When the branch is predicted as taken, the processor needs to get the address from the instruction, requiring decode. Or it can tabulate that address as well as the 2bit count: Opteron does this too.

Inlining

Inlining is a trick to avoid call overhead.It is available in C++ and used commonly in serious code. It was important on the VAX, but much less so now.

In fact, inlining can slow code down, because the code expands in size and may overflow the CPU cache.Need to do this carefully.

But note that inlining is better software practice than using C preprocessor macros with arguments: it gets the compiler checking types, etc.

Loop unrolling

Example from pg. 397: add a constant to each element of an array:

original code: tight loop, doing one element in each pass

unrolled code: each pass does 4 elements, one after another

unrolled code takes 4 times fewer branches that original code—does this matter?

Not with good dynamic branch prediction: we saw one mis-prediction per loop execution, so same number either way here.

Conclusion: loop unrolling is not important with modern processors as a branch-avoiding trick.

Loop unrolling does turn out to be good in certain cases for multiple issue CPUs, considered just below.

Exceptions: a big problem for design, but not so much for performance, since they happen maybe every millionth instruction (syscalls, page faults)

Multiple issue CPUs: pg. 394-399

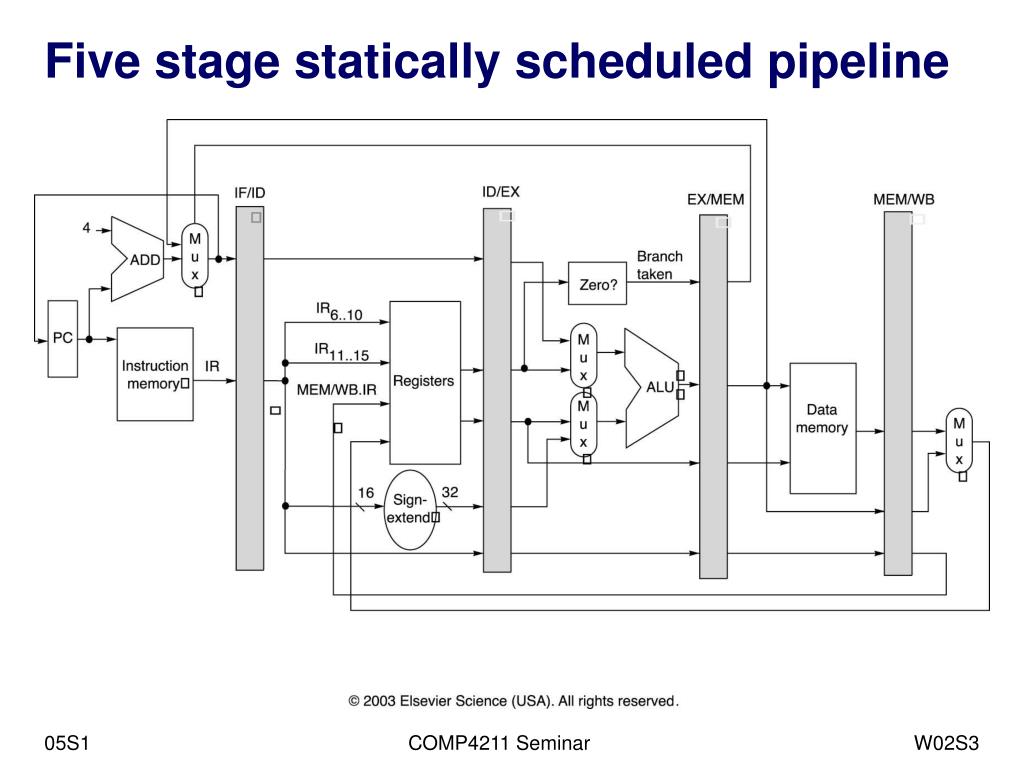

Our MIPS pipeline has only one ALU, so only one EX can happen per cycle.

Could have two ALUs, as shown on pg. 395, and be processing two instructions at a time.That means 2 instructions start on each cycle, the multiple-issue idea.

Or even more. The Opteron has 3 ALU, 3 AGUs (address gen units),3 floating point/multimedia processors, and 2 load/store units.

For multimedia, it implements the SSE streaming SIMD instructions, discussed in Sec. 7.6 (we brushed by this) and in Sec. 3.7, which we didn’t get to.

This sounds like the GPUs we looked at briefly, which can be said to be massively multiple-issue processors.

How does the Opteron handle those awful variable-length, irregular x86-64 instructions?Converts them to RISC micro-ops on the fly.

Info on Opteron processor is now linked the class web page.

Sec. 4.11: AMD Opteron X4, quad-core, descendent of CPU on sf06 (dual core)

Diagram on pg. 405 shows internal organization, with multiple ALUs, etc.

Loop unrolling for multiple-issue CPUs

pg. 397: an antidependency, or name dependency, is ordering forced by reuse of a named location, usually a register, rather than a “real data dependency”.

unrolled code, same example as above adding constant to all array element:

one pass uses

$t0 for one element

$t1 for next element

$t2 for next

$t3 for next

By giving the job to different registers, there are fewer data dependencies, and the ADDs can happen in parallel. This is called register renaming.

It takes a smart compiler to set this up.

Next time: final review, teacher evaluations